If you deploy your app to Azure Cloud Services, you’ll notice the installation drive yo-yos between E: and F: with each deployment. These drives are always created with fairly limited capacity, roughly 1.5GB.
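Because the drive letter changes between deployments, it can help to check which drive the role landed on and how much room is left. The sketch below is written in Python purely for illustration (a real startup task would more likely use PowerShell or cmd). It assumes the Azure `RoleRoot` environment variable names the role's drive (e.g. `E:`) and falls back to the current working directory when that variable is unset; the function name `install_drive_usage` is hypothetical.

```python
import os
import shutil
from pathlib import Path

GIB = 1024 ** 3


def install_drive_usage(start_path=None):
    """Return (drive_root, total_gib, free_gib) for the drive holding start_path.

    Assumption: on an Azure Cloud Services role, the RoleRoot environment
    variable names the drive the role was deployed to (E: or F:). If no path
    is given and RoleRoot is unset, the current working directory is used.
    """
    if start_path is None:
        start_path = os.environ.get("RoleRoot") or os.getcwd()
    # abspath turns a bare drive like "E:" into a full, existing path.
    path = os.path.abspath(start_path)
    # disk_usage reports on the volume that contains the path.
    usage = shutil.disk_usage(path)
    # anchor is the drive plus root, e.g. "E:\\" on Windows or "/" on POSIX.
    root = Path(path).anchor
    return root, usage.total / GIB, usage.free / GIB


if __name__ == "__main__":
    root, total, free = install_drive_usage()
    print(f"{root} {free:.2f} GiB free of {total:.2f} GiB")
```

Running it after each deployment shows which of E: or F: the role landed on, and watching the free figure makes it obvious when logs or caches are filling the drive.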

Certain Sitecore operations write a lot of data to disk: logging, media caches, icons, installation history and more. As part of your deployment, I’d suggest making the following changes:

- Move the data folder to another location

  - This can be patched in with the `dataFolder` sc.variable

- Move the media cache

  - This can be patched in with the `Media.CacheFolder` setting

  - Remember to also update the cleanup agent entries that delete old file-based media cache files; the folders they clean are referenced in the CleanupAgent.

- Move the temp folder

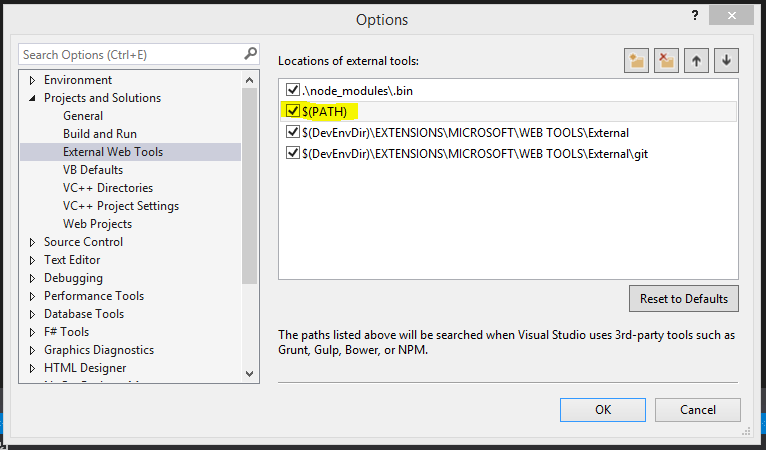

- Now, this isn’t quite so simple. The reason for noting this item is installing update files creates large __UpgradeHistory folders. Also, things like icons are cached in the /temp folder. For this reason /temp needs to live as /temp under the site root. With some help from support they concluded that rather than a virtual directory, a symlink was the best approach. For details on setting one up via powershell, see http://stackoverflow.com/questions/894430/powershell-hard-and-soft-links. As part of our startup scripts we included calling the script to shift things around.

- The clue a folder is a symlink is the icon will change ala: