In the latest version of Octopus Deploy you can now run script steps where the script files live in a package. This might sound like a minor change, but it opens up some very neat options. You can read more on the details of the change at https://octopus.com/blog/octopus-deploy-3.3#ScriptsInPackages. Note that at the time of writing this is only available in the beta of 3.3 (https://octopus.com/downloads/3.3.0-beta0001).

Ok, so why is this such a good thing?

Step templates are great – there is even a large library of pre-existing templates to download (https://library.octopusdeploy.com/#!/listing). If you’ve not used them before, step templates allow additional scripts to run during your deployment.

Examples could be: post to Slack, create certain folders, delete given files, etc. Basically anything you can achieve with PowerShell can be done in step templates.

Let’s just stick with step templates then?

If you’ve set up several deployments with Octopus and want to replicate the same functionality across several projects or installs, you need to re-create all the step template configurations each time. It’s not the slowest process, but the idea below helps streamline things.

Now that you can run scripts from a package, why not source control the steps you want to run? One key advantage is that you then get a full change history for all the deployment steps.

What needs setting up?

You need to be running version 3.3 or higher of Octopus – see above for the link.

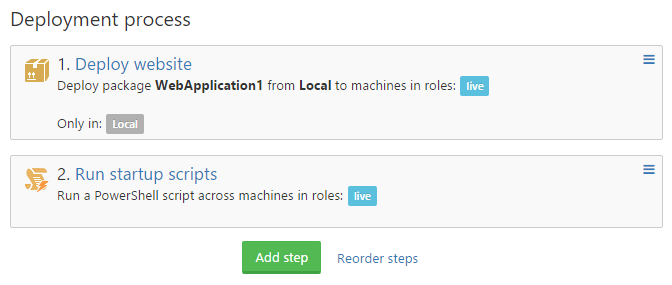

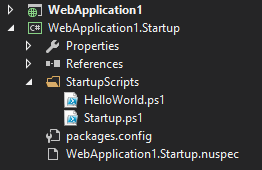

I’ve been using a simple test deployment of an out-of-the-box MVC project along with a new project specifically for the scripts.

The first is a vanilla website deployment of ‘WebApplication1’. The second contains the startup scripts.

Note the package ID. The idea behind using a separate project is that the PowerShell scripts never need to exist in the website project.

I chose a class library for the simple reason that I could include a reference to OctoPack, which made building the output NuGet file trivial.
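As a rough sketch of what that build looks like from the command line: OctoPack only runs when MSBuild is given the RunOctoPack property, and OctoPackPackageVersion sets the package version. The helper name Get-OctoPackBuildArguments, the project file name and the Release configuration are my assumptions for illustration, not something from the test project.

```powershell
# Hypothetical helper: builds the MSBuild argument list for an OctoPack-enabled project.
function Get-OctoPackBuildArguments {
    param(
        [Parameter(Mandatory)][string] $ProjectPath,
        [string] $PackageVersion
    )

    # RunOctoPack=true is what switches OctoPack on during the build.
    $arguments = @($ProjectPath, '/t:Build', '/p:Configuration=Release', '/p:RunOctoPack=true')
    if ($PackageVersion) {
        $arguments += "/p:OctoPackPackageVersion=$PackageVersion"
    }
    return $arguments
}

# Assumed project file name, based on the package ID used in this post.
$buildArguments = Get-OctoPackBuildArguments -ProjectPath 'WebApplication1.Startup.csproj' -PackageVersion '1.0.0'
Write-Host "msbuild $($buildArguments -join ' ')"

# Uncomment on a machine with MSBuild on the PATH:
# & msbuild @buildArguments
```

Keeping the argument list in one place makes it easy to reuse the same switches on a build server.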

The nuspec file is important as it tells the packaging step to include all PowerShell files:

```xml
<?xml version="1.0"?>
<package xmlns="http://schemas.microsoft.com/packaging/2010/07/nuspec.xsd">
  <metadata>
    <id>WebApplication1.Startup</id>
    <title>TODO Your Web Application</title>
    <version>1.0.0</version>
    <authors>TODO Your name</authors>
    <owners>TODO Your name</owners>
    <licenseUrl>http://TODO.com</licenseUrl>
    <projectUrl>http://TODO.com</projectUrl>
    <requireLicenseAcceptance>false</requireLicenseAcceptance>
    <description>TODO A sample project</description>
    <releaseNotes>TODO This release contains the following changes...</releaseNotes>
  </metadata>
  <files>
    <file src="**\*.ps1" target="\" />
  </files>
</package>
```
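Since a .nupkg is just a zip archive, one way to check that the files rule picked up your scripts is to list the .ps1 entries in the built package. This is only a sketch: Get-PackagedScripts is a name I made up, and the package file name is an assumption based on the ID and version in the nuspec.

```powershell
Add-Type -AssemblyName System.IO.Compression.FileSystem

# Hypothetical helper: returns the paths of all .ps1 files inside a .nupkg (a zip archive).
function Get-PackagedScripts {
    param([Parameter(Mandatory)][string] $PackagePath)

    # Resolve to a full path because .NET does not follow the PowerShell working directory.
    $fullPath = (Resolve-Path -LiteralPath $PackagePath).Path
    $archive = [System.IO.Compression.ZipFile]::OpenRead($fullPath)
    try {
        $scripts = @($archive.Entries |
            Where-Object { $_.FullName -like '*.ps1' } |
            ForEach-Object { $_.FullName })
    }
    finally {
        $archive.Dispose()
    }
    return $scripts
}

# Assumed output name; adjust to wherever your build drops the package.
$package = '.\WebApplication1.Startup.1.0.0.nupkg'
if (Test-Path $package) {
    Get-PackagedScripts -PackagePath $package | ForEach-Object { Write-Host $_ }
}
```

If the list comes back empty, the src pattern in the files element is the first thing to check.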

packages.config simply contains a reference to OctoPack:

```xml
<?xml version="1.0" encoding="utf-8"?>
<packages>
  <package id="OctoPack" version="3.0.53" targetFramework="net46" developmentDependency="true" />
</packages>
```

And finally the scripts:

Helloworld.ps1

```powershell
Write-Host "Hello World PS script example"

if ($OctopusParameters) {
    ##enable the next line to show all Octopus variables
    #$OctopusParameters.GetEnumerator() | Sort-Object Name | % { "$($_.Key)=$($_.Value)" }
}

#use this approach to pull in additional script files
. .\Startup.ps1
```
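If you want to try the variable dump away from a Tentacle, a small sketch like the following works with a stand-in hashtable. Format-Parameters is a hypothetical helper name and the stand-in values are invented; during a real deployment Octopus supplies $OctopusParameters for you.

```powershell
# Hypothetical helper: formats every entry of a dictionary as "key=value", sorted by key.
function Format-Parameters {
    param([Parameter(Mandatory)] $Parameters)

    $Parameters.GetEnumerator() |
        Sort-Object Key |
        ForEach-Object { "$($_.Key)=$($_.Value)" }
}

# Stand-in values (assumptions) so the sketch runs outside Octopus.
if (-not $OctopusParameters) {
    $OctopusParameters = @{
        'Octopus.Environment.Name' = 'Test'
        'Octopus.Action[Deploy website].Output.Package.InstallationDirectoryPath' = 'C:\Sites\WebApplication1'
    }
}

Format-Parameters -Parameters $OctopusParameters | ForEach-Object { Write-Host $_ }
```

Sorting by Key rather than Name keeps the helper working for both hashtables and generic dictionaries.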

And the more important one, Startup.ps1

```powershell
# Creates the folder unless it already exists.
function createFolderIfNotExists{
    param([string]$folder)
    if (!(Test-Path $folder)) {
        Write-Host "Creating $folder"
        New-Item -Path $folder -ItemType Directory
    }
    else
    {
        Write-Host "Directory already exists!"
    }
}

# Grants read access on $item to each user in a comma-separated list.
function assignReadPermissionsTo {
    param([string] $item, [string] $readPermissionsTo)
    if(!(Test-Path $item))
    {
        throw "$item does not exist"
    }
    $users = $readPermissionsTo.Split(",")
    foreach($user in $users) {
        Write-Host "Adding read permissions for $user"
        $acl = Get-Acl $item
        $acl.SetAccessRuleProtection($False, $False)
        $rule = New-Object System.Security.AccessControl.FileSystemAccessRule($user, "Read", "ContainerInherit, ObjectInherit", "None", "Allow")
        $acl.AddAccessRule($rule)
        Set-Acl $item $acl
    }
}

# Grants write access on $item to each user in a comma-separated list.
function assignWritePermissionsTo {
    param([string] $item, [string] $writePermissionsTo)
    if(!(Test-Path $item))
    {
        throw "$item does not exist"
    }
    $users = $writePermissionsTo.Split(",")
    foreach($user in $users) {
        Write-Host "Adding write permissions for $user"
        $acl = Get-Acl $item
        $acl.SetAccessRuleProtection($False, $False)
        $rule = New-Object System.Security.AccessControl.FileSystemAccessRule($user, "Write", "ContainerInherit, ObjectInherit", "None", "Allow")
        $acl.AddAccessRule($rule)
        Set-Acl $item $acl
    }
}

# Folders to create under the deployed website, and who gets write access to them.
$foldersToCreate = @("_logs","test\deep")
$usersToGiveAccess = "IIS_IUSRS"

# Only runs when Octopus has supplied its parameters, i.e. during a deployment.
if ($OctopusParameters) {
    $installFolder = $OctopusParameters['Octopus.Action[Deploy website].Output.Package.InstallationDirectoryPath']
    Write-Host "Environment: " $OctopusParameters['Octopus.Environment.Name']
    Write-Host "Deployment folder: " $installFolder

    Foreach ($folder in $foldersToCreate)
    {
        $newFolder = [io.path]::combine($installFolder,$folder)
        createFolderIfNotExists -folder $newFolder
        assignWritePermissionsTo -item $newFolder -writePermissionsTo $usersToGiveAccess
    }
}

# Work out the folder this script is running from.
$PSScriptRoot = Split-Path -Parent -Path $MyInvocation.MyCommand.Definition
Write-Host "Current running folder is: " $PSScriptRoot
```
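To rehearse the folder-creation part without a deployment, the same loop can be pointed at a temporary directory. This is a sketch under a few assumptions: a plain hashtable stands in for $OctopusParameters, New-FolderIfMissing is a hypothetical variant of createFolderIfNotExists that reports whether it created anything, and the permission step is left out because it only makes sense on a Windows box with IIS.

```powershell
# Hypothetical variant of createFolderIfNotExists that returns $true when it creates the folder.
function New-FolderIfMissing {
    param([Parameter(Mandatory)][string] $Folder)

    if (-not (Test-Path -LiteralPath $Folder)) {
        New-Item -Path $Folder -ItemType Directory | Out-Null
        return $true
    }
    return $false
}

# Assumption: a temporary directory stands in for the real installation folder.
$installFolder = Join-Path ([System.IO.Path]::GetTempPath()) "octo-dryrun-$([System.Guid]::NewGuid())"
$fakeParameters = @{
    'Octopus.Action[Deploy website].Output.Package.InstallationDirectoryPath' = $installFolder
}

$target = $fakeParameters['Octopus.Action[Deploy website].Output.Package.InstallationDirectoryPath']
foreach ($folder in @('_logs', (Join-Path 'test' 'deep'))) {
    $newFolder = [System.IO.Path]::Combine($target, $folder)
    $created = New-FolderIfMissing -Folder $newFolder
    Write-Host "$newFolder created: $created"
}
```

Running it a second time against the same folder should report that nothing was created, which is the behaviour you want from a step that runs on every deployment.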

It’s worth noting this should be considered a proof of concept of the approach. The next steps would be to split the scripts up into more meaningful units, remove the hello world script and update the nuspec with more valid information.

If you struggle to find the Octopus parameters you need, the script in Helloworld.ps1 lets you dump out all parameters and their values. In the startup script, the parameter $OctopusParameters['Octopus.Action[Deploy website].Output.Package.InstallationDirectoryPath'] depends on the name of the package deployment step in your deployment process (here step 1, named ‘Deploy website’). If you rename that step, the key changes with it.
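One way to make that dependency explicit is to build the key from the step name and fail loudly when it is missing. The following is a minimal sketch, not how Octopus itself does anything: Get-InstallDirectory is a made-up helper, and the hashtable is a stand-in for the parameters a real deployment provides.

```powershell
# Hypothetical helper: looks up the installation directory output variable for a named step.
function Get-InstallDirectory {
    param(
        [Parameter(Mandatory)] $Parameters,
        [Parameter(Mandatory)][string] $StepName
    )

    $key = "Octopus.Action[$StepName].Output.Package.InstallationDirectoryPath"
    if (-not $Parameters.ContainsKey($key)) {
        throw "No value for '$key'. Check that the step name matches your deployment process."
    }
    return $Parameters[$key]
}

# Stand-in parameters (assumed values) so the sketch runs outside Octopus.
$sampleParameters = @{
    'Octopus.Action[Deploy website].Output.Package.InstallationDirectoryPath' = 'C:\Sites\WebApplication1'
}

$installFolder = Get-InstallDirectory -Parameters $sampleParameters -StepName 'Deploy website'
Write-Host "Deployment folder: $installFolder"
```

With the step name passed in, the script fails with a readable message when someone renames the step, instead of quietly combining paths with an empty folder.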