Following on from the previous post, AlexaCore, this post will explain some of the challenges you might encounter when launching Alexa Skills into the store. It will also cover some cool things you can do if you want to enrich the feedback users receive as they use your skill.

Time for a brew?

If you need to settle the debate over who makes the tea, why not check out Tea Round, recently enabled on the Skill Store?

First up, how can you find skills?

They are all available on the Amazon site, or accessible via the alexa.amazon.com portal.

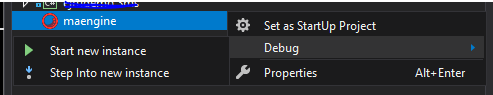

Running test versions of your skill

This is pretty straightforward. You need to log in to developer.amazon.com and run through the wizard to create a skill, which includes pairing it up with an AWS Lambda (or controller endpoint). You should then see the newly created skill in your Alexa app, marked with a ‘dev’ flag.

Testing your skill

You have a couple of options: either talk to your Alexa with voice commands, or use the text test tool within the developer.amazon.com console.

Getting certified: gotchas

The certification process looks to validate and check a few things:

- Are there bugs in the skill?

- Do the descriptions and prompts align with your skill’s intents?

- Do you leave the users hanging?

Things that caught me out during this process were:

- Testing the skill where you skip past the launch intent

  - E.g. rather than asking ‘Alexa, open the tea round’ and then allowing the LaunchIntent to run, you can ask ‘Alexa, open the tea round and spin the wheel’. My logic for initializing the session originally ran only in the LaunchIntent, so it was skipped when the skill was opened this way. Some simple refactoring resolved this.

- Leaving the users hanging – in my opinion this isn’t great UX but rules are rules

  - If you respond to a user without a prompt, i.e. without asking anything of the user, the rules state that you should end the session. My AddIntent would respond to ‘Add Nick’ with ‘Ok, Nick is now in the team’. To get through certification it needed updating to ‘Ok, Nick is now in the team. Why not spin the wheel?’

- Make sure the suggested prompts you include match up to the text set in your intents. The best bet here is to look at other skills and see how they phrase the prompts.

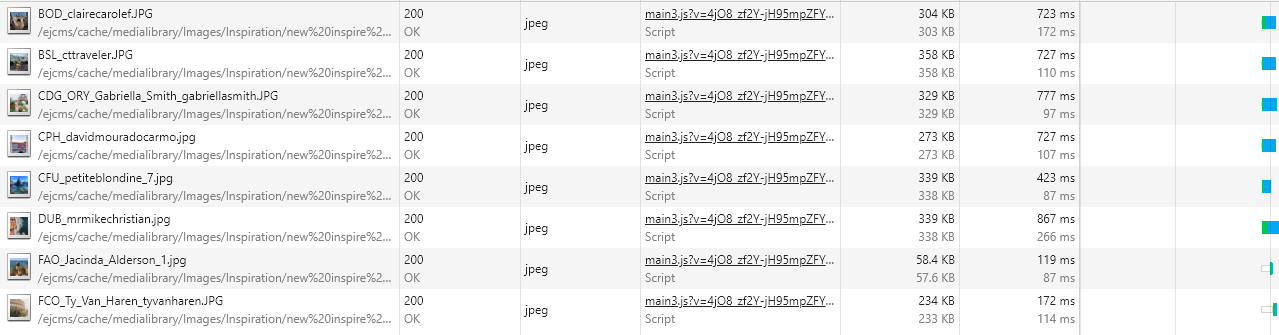
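The first two gotchas can be sketched in a few lines of Python. This is a rough illustration rather than the Tea Round code: `ensure_session` and `build_response` are hypothetical helper names, the slot name `Name` and the welcome text are made up, and the handler works on the raw JSON Alexa sends to a Lambda, where the launch arrives as a `LaunchRequest` and intents as an `IntentRequest`.

```python
def ensure_session(event):
    """Return session attributes, initialised however the skill was opened."""
    # "attributes" is absent (or null) on the first request of a session.
    attributes = (event.get("session") or {}).get("attributes") or {}
    attributes.setdefault("team", [])
    return attributes


def build_response(text, attributes, reprompt=None):
    """Keep the session open only when we actually ask the user something."""
    response = {
        "outputSpeech": {"type": "PlainText", "text": text},
        "shouldEndSession": reprompt is None,
    }
    if reprompt is not None:
        response["reprompt"] = {
            "outputSpeech": {"type": "PlainText", "text": reprompt}
        }
    return {"version": "1.0", "sessionAttributes": attributes, "response": response}


def handler(event, context=None):
    # Runs for every request, so skipping the launch step cannot bypass it.
    attributes = ensure_session(event)
    request = event["request"]

    if request["type"] == "LaunchRequest":
        return build_response(
            "Welcome to Tea Round. Who is in the team?",  # illustrative wording
            attributes,
            reprompt="Who would you like to add?",
        )

    if request["type"] == "IntentRequest" and request["intent"]["name"] == "AddIntent":
        name = request["intent"]["slots"]["Name"]["value"]  # hypothetical slot name
        attributes["team"].append(name)
        return build_response(
            f"Ok, {name} is now in the team. Why not spin the wheel?",
            attributes,
            reprompt="Why not spin the wheel?",
        )

    # Nothing to ask, so end the session rather than leave the user hanging.
    return build_response("Goodbye.", attributes)
```

Because the session setup lives in `ensure_session` rather than in the launch branch, ‘open the tea round and spin the wheel’ style requests get the same starting state as a plain launch.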

Saving data beyond a session

Much like a session in a web application, a session is persisted for the lifetime of a single run of the skill. It can be used to store anything you want; a simple example would be an array of the names of the people in the Tea Round. That’s fine, but the next time someone loads the skill the session will be empty and the user will need to re-add each person – with all the extra questioning needed for certification this could get painful.

AWS provides a document database, DynamoDB, that’s very well suited to this kind of thing. The Tea Round stores the permanent team in DynamoDB, updated from the Lambda function that sits behind the skill.
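As a rough sketch of that idea in Python (not the actual Tea Round implementation), the two helpers below read and write a team keyed by the Alexa user id. The helper names, the `userId` and `team` attribute names, and the table name in the comment are all assumptions. The `table` argument is expected to behave like a boto3 DynamoDB `Table` resource, and a small in-memory fake is included so the sketch runs without AWS credentials.

```python
def load_team(table, user_id):
    """Fetch the saved team for this user, or an empty list if nothing is stored."""
    result = table.get_item(Key={"userId": user_id})
    # DynamoDB omits "Item" from the result when the key does not exist.
    return result.get("Item", {}).get("team", [])


def save_team(table, user_id, team):
    """Overwrite the saved team for this user."""
    table.put_item(Item={"userId": user_id, "team": team})


class FakeTable:
    """In-memory stand-in for a DynamoDB table, for local experiments only."""

    def __init__(self):
        self.items = {}

    def get_item(self, Key):
        item = self.items.get(Key["userId"])
        return {"Item": item} if item is not None else {}

    def put_item(self, Item):
        self.items[Item["userId"]] = Item


# Inside a real Lambda the table would come from boto3 instead, e.g.
#   table = boto3.resource("dynamodb").Table("TeaRoundTeams")  # hypothetical table name
# and the user id from event["session"]["user"]["userId"].
table = FakeTable()
save_team(table, "user-1", ["Nick", "Sam"])
print(load_team(table, "user-1"))
```

Loading the team at the start of a session and saving it whenever it changes means returning users skip the re-adding step entirely.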

Understanding users’ names

This can be tricky, as subtle variations in names can lead to them being spelt and pronounced very differently – especially when regional dialect comes into play. The best success I’ve found is to provide as broad a set of example names as possible when setting up your {slots} in the intent.
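One way to keep a broad list of example names manageable is to generate the slot values from a plain list. The sketch below assumes the JSON interaction model format, where a custom slot type is an object with a `name` and a list of `values`; the type name `TEAM_MEMBER_NAME`, the helper name and the sample names are made up for illustration.

```python
import json

# Illustrative examples only: include spelling variants and regional names.
SAMPLE_NAMES = ["Nick", "Nic", "Nik", "Nicholas", "Sara", "Sarah", "Siobhan", "Shevaun"]


def build_name_slot_type(names, type_name="TEAM_MEMBER_NAME"):
    """Build a custom slot type entry from a list of example names."""
    # Trim whitespace, drop blanks and duplicates, and sort for stable output.
    unique = sorted({name.strip() for name in names if name.strip()})
    return {
        "name": type_name,
        "values": [{"name": {"value": name}} for name in unique],
    }


print(json.dumps(build_name_slot_type(SAMPLE_NAMES), indent=2))
```

The printed JSON can then be pasted into the `types` section of the skill's interaction model, assuming that is the format your console version uses.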

Enriching the responses

A typical request & response cycle will send information that Amazon decodes from speech into your Lambda function. From there you can then return text that gets read out by your Alexa. By using Sitecore as a headless backend, the response text can be driven from the CMS – some recent updates to AlexaCore provide helpers for making these requests.
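To make the CMS-driven part concrete, here is a minimal Python sketch. It is not the AlexaCore helper API: `speech_for` and `fetch_text` are hypothetical names, and the `CONTENT` dictionary simply stands in for whatever call your Lambda would make to a headless CMS. The idea is that the intent name selects a piece of content, and slot values are merged into it before it is read out.

```python
# Stand-in for content managed in a CMS, keyed by intent (or request type).
CONTENT = {
    "LaunchRequest": "Welcome to Tea Round. Who is making the tea?",  # illustrative
    "AddIntent": "Ok, {Name} is now in the team. Why not spin the wheel?",
}


def speech_for(event, fetch_text):
    """Pick the response text for a request and fill in any slot values.

    fetch_text is any callable that maps a content key to a template string;
    in a real skill it would wrap a request to the CMS.
    """
    request = event["request"]
    if request["type"] == "IntentRequest":
        key = request["intent"]["name"]
    else:
        key = request["type"]

    # Slots are only present on intent requests; unfilled slots have no "value".
    slots = request.get("intent", {}).get("slots", {})
    values = {name: slot.get("value", "") for name, slot in slots.items()}

    return fetch_text(key).format(**values)


event = {
    "request": {
        "type": "IntentRequest",
        "intent": {"name": "AddIntent", "slots": {"Name": {"name": "Name", "value": "Nick"}}},
    }
}
print(speech_for(event, CONTENT.__getitem__))
```

Passing the lookup in as a function keeps the handler testable locally and lets the content source change without touching the skill logic.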

Where things then get interesting is that you can personalize messaging based on behaviour and user interactions. Big brother is watching?!?!

Closing thoughts

Gasp, after all that I’m thirsty – time for a brew! (how English eh :))

If you fancy allowing Alexa into your tea making process, have a look at Tea Round.